Resources to aid your learning of LLM finetuning learning journey. Guides for starters, intermediate and advamced users. And before you do that, read why efficient finetuning is going to be the key for production read, cost efficient, domain relevant LLMs.

Shortcut to learning media:

As POCs and POEs mature to become production ready platforms, the role of fine-tuning (together with RAG in many cases) becomes key to successful production ready LLM AI platforms / solutions. Finetuning gets to be come key and efficient finetuning the pinnacle of production success.

Two articles crisply shares my opinion. Rather than rehashing, here are the gems -

-

Generative AI — LLMOps Architecture Patterns Debmalya Biswas breaksdown possible architecture patterns into -

-

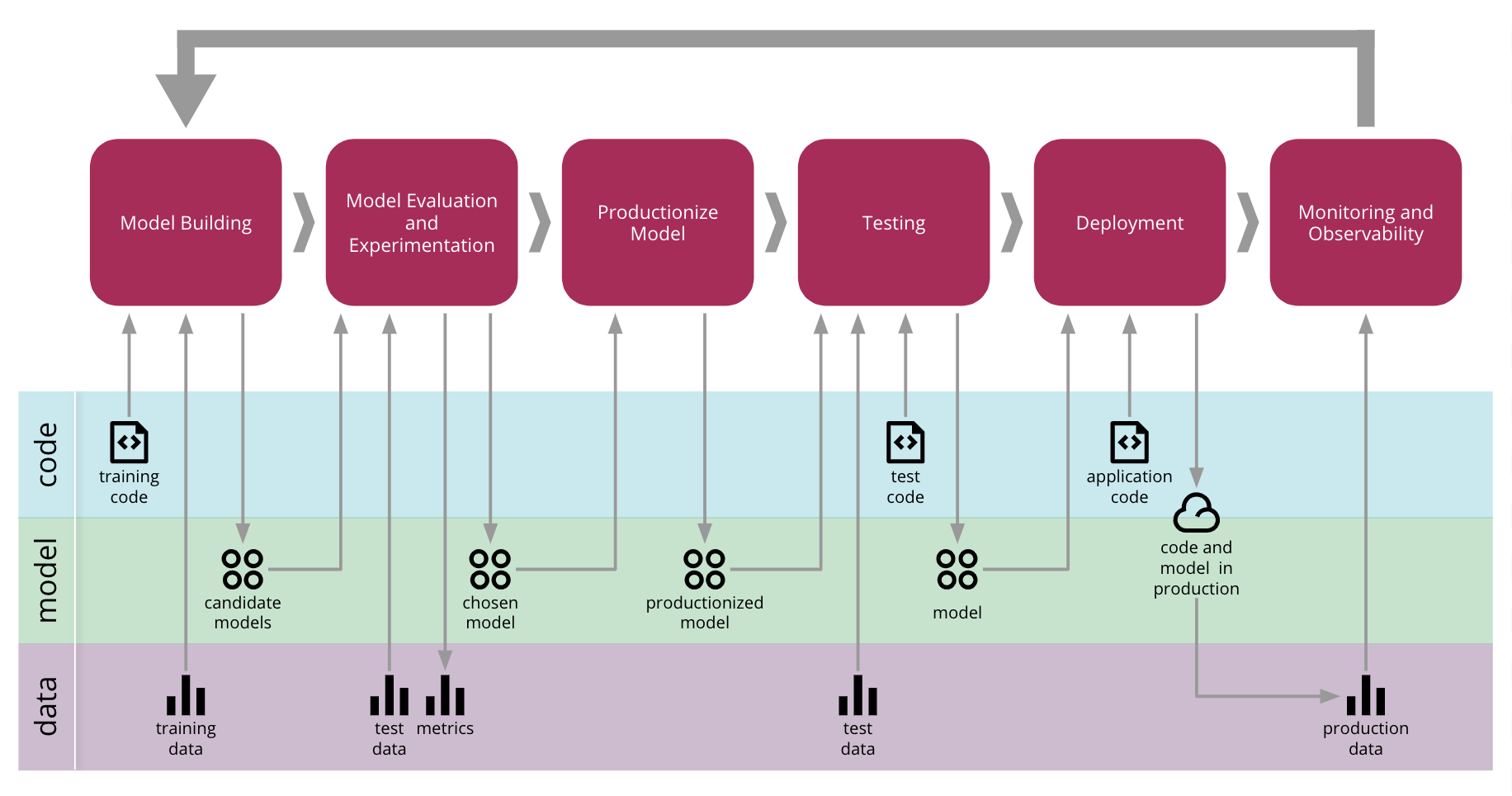

Continuous Delivery for Machine Learning Danilo Sato, Arif Wider, Christoph Windheuser shares CD4ML which delves deep into how to productionize such models.

Also check out the collection of information on how to productionize ML. The biggest jump is from POC to Productionizing, which requires a lot more planning, thoughtful workflows and of course automation - Awesome Production Machine Learning. Important principles - Reproducibility, Orchestration, Explainability.

Combine the pattern from Debmalya (gem 1) with true and tested MLOps / CD4ML (gem 2) for managing enterprise ready LLMOps platforms and solutions.

My bet from architecture patterns is pattern 3, but too early to call! Efficient Fine-Tuning will be the key to production ready cost effective, domain relevant solutions and platform. And the need to learn and train ourselves with finetuning which may be a preferred way of enterprise / production ready cost effective LLM solutions and platforms surfaces to the top!

And to be ready for this upcoming challenge, here is a collection of resources that can help you get a quickstart.

| Content / Blog | Description |

|---|---|

| (Beginner) RAG vs Finetuning | RAG vs Finetuning — Which Is the Best Tool to Boost Your LLM Application? The definitive guide for choosing the right method for your use case |

| (Beginner) RAG, Fine-tuning or Both? | RAG, Fine-tuning or Both? A Complete Framework for Choosing the Right Strategy |

| Optimizing LLMs | Optimizing LLMs: A Step-by-Step Guide to Fine-Tuning with PEFT and QLoRA (A Practical Guide to Fine-Tuning LLM using QLora) |

| Parameter-Efficient Fine-Tuning (PEFT) | PEFT methods only fine-tune a small number of (extra) model parameters, significantly decreasing computational and storage costs because fine-tuning large-scale PLMs is prohibitively costly. |

| A guide to parameter efficient fine-tuning (PEFT) | This article will discuss the PEFT method in detail, exploring its benefits and how it has become an efficient way to fine-tune LLMs on downstream tasks. |

| PEFT: Parameter-Efficient Fine-Tuning of Billion-Scale Models on Low-Resource Hardware | PEFT approaches enable you to get performance comparable to full fine-tuning while only having a small number of trainable parameters. |

| Ludwig - Declarative deep learning framework | Declarative deep learning framework built for scale and efficiency. Ludwig is a low-code framework for building custom AI models like LLMs and other deep neural networks. Learn when and how to use Ludwig! |

| Fine-tuning OpenLLaMA-7B with QLoRA for instruction following | Tried and tested sample - With the availability of powerful base LLMs (e.g. LLaMA, Falcon, MPT, etc.) and instruction tuning datasets, along with the development of LoRA and QLoRA, instruction fine-tuning a base model is increasingly accessible to more people/organizations. |

| Personal Copilot: Train Your Own Coding Assistant | In this blog post we show how we created HugCoder, a code LLM fine-tuned on the code contents from the public repositories of the huggingface GitHub organization. |

| Video | Description |

|---|---|

| Efficient Fine-Tuning for Llama-v2-7b on a Single GPU | This session will equip ML engineers to unlock the capabilities of LLMs like Llama-2 on for their own projects. |

| Efficiently Build Custom LLMs on Your Data with Open-source Ludwig | detail for the video |

| Introduction to Ludwig - multi-model sample | Train and Deploy Amazing Models in Less Than 6 Lines of Code with Ludwig AI |

| Ludwig: A Toolbox for Training and Testing Deep Learning Models without Writing Code | Ludwig, an open source, deep learning toolbox built on top of TensorFlow that allows users to train and test machine learning models without writing code. |

| (Beginner) Build Your Own LLM in Less Than 10 Lines of YAML (Predibase) | Did you know you can start trying Ludwig OpenSource on Azure How you can get started building your own LLM - How declarative ML simplifies model building and training; How to use off-the-shelf pretrained LLMs with Ludwig - the open-source declarative ML framework from Uber ; How to rapidly fine-tune an LLM on your data in less than 10 lines of code with Ludwig using parameter efficient methods, deepspeed and Ray |

| (Beginner) To Fine Tune or Not Fine Tune? That is the question | Ever wondered about the balance between the benefits and drawbacks of fine-tuning models? Curious about when it's the right move for customers? Look no further; we've got you covered! |

| Sample | Description |

|---|---|

| Parameter-Efficient Fine-Tuning (PEFT) | PEFT methods only fine-tune a small number of (extra) model parameters, significantly decreasing computational and storage costs because fine-tuning large-scale PLMs is prohibitively costly. |

| QLoRA: Efficient Finetuning of Quantized LLMs | QLORA - an efficient finetuning approach that reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance |

| Ludwig | Low-code framework for building custom LLMs, neural networks, and other AI models |

| (Beginner) Ludwig 0.8: Hands On Webinar | How integrations with Deepspeed and parameter-efficient fine-tuning techniques make training LLMs with Ludwig fast and easy |

| Fine-tuning LLMs with PEFT and LoRA | PEFT: Parameter-Efficient Fine-Tuning of Billion-Scale Models on Low-Resource Hardware |

| (Beginner) Fine-tuning OpenLLaMA-7B with QLoRA for instruction following | Tried and tested sample - With the availability of powerful base LLMs (e.g. LLaMA, Falcon, MPT, etc.) and instruction tuning datasets, along with the development of LoRA and QLoRA, instruction fine-tuning a base model is increasingly accessible to more people/organizations. |

| Curated list of the best LLMOps tools for developers | An awesome & curated list of the best LLMOps tools for developers. |

| Awesome Production Machine Learning | MLOps tools to scale your production machine learning - This repository contains a curated list of awesome open source libraries that will help you deploy, monitor, version, scale and secure your production machine learning |

| Sample | Description |

|---|---|

| LoRA: Low-Rabm Adaptation of Large Language Models | Train with significantly fewer GPUs and avoid I/O bottlenecks. Another benefit is that we can switch between taskswhile deployed at a much lower cost by only swapping the LoRA weights as opposed to all the parameters |

| QLORA: Efficient Finetuning of Quantized LLMs | QLORA - an efficient finetuning approach that reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance |