The goal of image captioning is to automatically generate text that describes a picture. In the past few years, it has attracted growing interest in machine learning, and advances in the field have produced models that, depending on the evaluation metric, can score even higher than humans.

I used a free Gradient notebook from Paperspace:

- 8 CPUs

- 16 GB of GPU memory

- 32 GB of RAM

$ pip install -r requirements.txt

$ python -m spacy download en

$ python -m spacy download en_core_web_lg

The Flickr8k dataset consists of pairs of images and their corresponding captions (there are five captions for each image).

It can be downloaded directly using the links below:

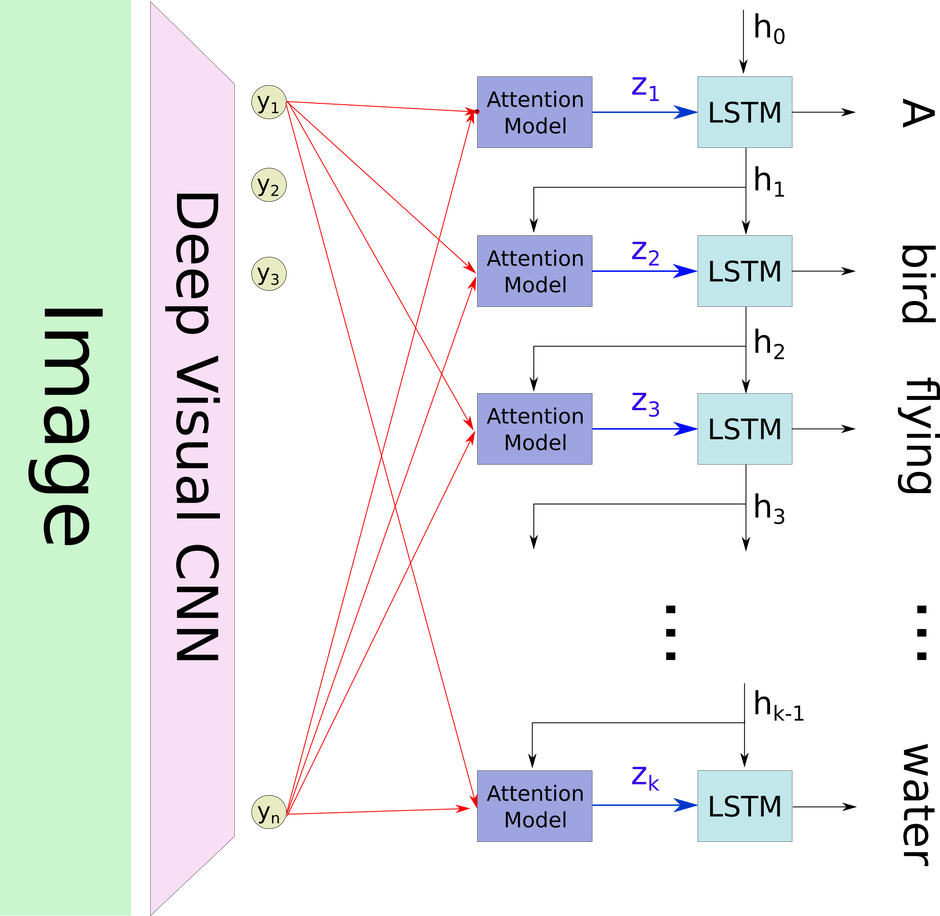

We used an encoder-decoder architecture combined with an attention mechanism. The encoder extracts image representations, and the decoder generates the image captions.

A CNN is a class of deep, feed-forward artificial neural network that has been successfully applied to analyzing visual imagery. A CNN consists of an input layer and an output layer, as well as multiple hidden layers. The hidden layers typically consist of convolutional layers, pooling layers, fully connected layers, and normalization layers. CNNs also have many applications, such as image and video recognition, recommender systems, and natural language processing.

We fine-tuned a pretrained ResNet model with its last two layers removed.

LSTM is a recurrent neural network architecture capable of learning long-term dependencies. An LSTM unit is composed of a cell, an input gate, an output gate, and a forget gate. Its hidden state is augmented with nonlinear mechanisms that allow the state to propagate without modification, be updated, or be reset, using simple learned gating functions. LSTMs work very well on a variety of problems, such as natural language text compression, handwriting recognition, and electric load forecasting.

As the name suggests, at each step of the decoder the attention module uses a direct connection to the encoder to focus on a particular part of the source image.
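To make the idea concrete, here is a minimal sketch of one attention step in plain Python. It is not the module used in this project: the dot-product scoring rule and the names `attend`, `region_features`, and `decoder_state` are assumptions chosen for illustration. It scores every image region against the current decoder state, turns the scores into weights with a softmax, and returns the weighted average of the region features as the context vector.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(region_features, decoder_state):
    """Return (attention weights, context vector) for one decoder step.

    region_features: list of feature vectors, one per image region.
    decoder_state:   current decoder hidden state (same length as a region vector).
    """
    # Assumed scoring rule: dot product between each region and the decoder state.
    scores = [sum(f * h for f, h in zip(region, decoder_state))
              for region in region_features]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the region features.
    dim = len(decoder_state)
    context = [sum(w * region[i] for w, region in zip(weights, region_features))
               for i in range(dim)]
    return weights, context

# Toy example: three image regions with 2-dimensional features.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend(regions, decoder_state=[2.0, 0.0])
```

In this toy example the first and third regions align best with the decoder state, so they receive the largest (and equal) weights, while the second region contributes very little to the context vector.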

- Number of training parameters: 136,587,749

- Learning rate: 3e-5

- Teacher forcing ratio: 0. This means that during training the decoder is fed its own previously generated token at each step, not the token from the true caption.

- Number of epochs: 15

- Batch size: 32

- Loss function: Cross-entropy

- Optimizer: RMSProp

- Metrics: Top-5 accuracy and BLEU (more below)
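The teacher forcing ratio in the list above can be illustrated with a small sketch. The helper `next_decoder_input` is hypothetical (it is not taken from this project's code); it only shows how the ratio decides which token the decoder sees at the next time step.

```python
import random

def next_decoder_input(true_token, predicted_token, teacher_forcing_ratio, rng=random):
    """Choose the token fed to the decoder at the next time step.

    With probability `teacher_forcing_ratio` the ground-truth token is used;
    otherwise the decoder's own previous prediction is used.
    """
    use_ground_truth = rng.random() < teacher_forcing_ratio
    return true_token if use_ground_truth else predicted_token

true_caption = ["a", "dog", "runs"]
predicted = ["a", "cat", "runs"]

# Ratio 0 (the setting listed above): the decoder always sees its own predictions.
inputs_ratio_0 = [next_decoder_input(t, p, 0.0) for t, p in zip(true_caption, predicted)]

# Ratio 1, for comparison: the decoder always sees the true caption.
inputs_ratio_1 = [next_decoder_input(t, p, 1.0) for t, p in zip(true_caption, predicted)]
```

Because `random.random()` returns a value in [0, 1), a ratio of 0 never selects the ground-truth token and a ratio of 1 always does.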

BLEU compares the machine-generated captions to one or several human-written captions and computes a similarity score based on:

- N-gram precision (we use 4-grams here)

- A penalty for system outputs that are too short (the brevity penalty)
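The two ingredients above can be sketched in plain Python as follows. This is a simplified sentence-level version written for illustration, following the definitions in Papineni et al. (2002); it is not necessarily the implementation used to produce the scores in this project, and the function names are hypothetical. Captions are assumed to be already tokenized into lists of words.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(candidate, references, n):
    """Clipped n-gram precision: each candidate n-gram is credited at most
    as many times as it appears in any single reference."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

def brevity_penalty(candidate, references):
    """Penalize candidates shorter than the closest reference length."""
    c = len(candidate)
    if c == 0:
        return 0.0
    # Closest reference length; ties are broken toward the shorter reference.
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    return 1.0 if c > r else math.exp(1 - r / c)

def bleu(candidate, references, max_n=4):
    """Geometric mean of the 1..max_n modified precisions times the brevity penalty."""
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # no smoothing in this sketch
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(candidate, references) * math.exp(log_mean)

reference = "a brown dog runs across the green field".split()
perfect_score = bleu(reference, [reference])      # identical caption
short_score = bleu(reference[:4], [reference])    # correct but too short
no_overlap_score = bleu("two cats sleep on sofa".split(), [reference])
```

The truncated caption matches the reference on every n-gram it contains, so its precisions are all 1 and its score is lowered only by the brevity penalty.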

While training, we use greedy decoding, taking the argmax at each step. The problem with this method is that there is no way to undo a decision. In inference mode, we instead use beam search: at each decoder step, we keep track of the k most probable partial captions (which we call hypotheses), where k is the beam size (here 5). Nevertheless, beam search is not guaranteed to find the optimal solution.
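The difference between the two decoding strategies can be seen on a toy example. The sketch below is an illustration only: `TOY_MODEL`, `toy_step`, and `beam_search` are hypothetical names, and the hand-written probability table stands in for the real decoder. It applies no length normalization, and a beam size of 1 reduces it to greedy decoding.

```python
import math

def beam_search(step_log_probs, start, end, beam_size=5, max_len=20):
    """Return (log-probability, tokens) of the best hypothesis found.

    step_log_probs(tokens) must return a dict mapping each possible next
    token to its log-probability given the partial caption `tokens`.
    """
    beams = [(0.0, [start])]  # (cumulative log-probability, tokens so far)
    finished = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates = []
        for score, tokens in beams:
            for token, log_p in step_log_probs(tokens).items():
                candidates.append((score + log_p, tokens + [token]))
        # Keep only the k most probable hypotheses.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, tokens in candidates[:beam_size]:
            (finished if tokens[-1] == end else beams).append((score, tokens))
        if not beams:
            break
    finished.extend(beams)  # hypotheses cut off at max_len
    return max(finished, key=lambda c: c[0])

# Toy next-token distributions; any other prefix is followed by "<end>".
TOY_MODEL = {
    ("<start>",): {"a": 0.6, "the": 0.4},
    ("<start>", "a"): {"dog": 0.55, "cat": 0.45},
    ("<start>", "the"): {"dog": 0.9, "cat": 0.1},
}

def toy_step(tokens):
    probs = TOY_MODEL.get(tuple(tokens), {"<end>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}

greedy_score, greedy_tokens = beam_search(toy_step, "<start>", "<end>", beam_size=1)
beam_score, beam_tokens = beam_search(toy_step, "<start>", "<end>", beam_size=5)
```

Greedy decoding commits to "a" because it is the most probable first word and ends up with a caption of probability 0.33, whereas the beam keeps "the" alive and finds "the dog" with probability 0.36.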

- Olah, C., & Carter, S. (2016). Attention and Augmented Recurrent Neural Networks.

- Papineni, K., Roukos, S., Ward, T., & Zhu, W. (2002). Bleu: a Method for Automatic Evaluation of Machine Translation. ACL.

- Alammar, J. Visualizing A Neural Machine Translation Model (Mechanics of Seq2seq Models With Attention).